Infrastructure-as-code (IaC) tools, such as Puppet and Chef, have fascinated me since I first became aware of them about three years ago, and I'm very excited that my organisation is now making use of them. Attending this session was thus an easy choice to make. Carlos Sanchez delivered a well balanced talk that was high-level enough for newbies to understand, but still provided enough meat to satisfy those familiar with the concepts. You can read the abstract and grab the slides from his blog.

I had two direct take-aways from his talk, one technical, the other cultural:

I had two direct take-aways from his talk, one technical, the other cultural:

Following his talk, Carlos and I met up to have a deeper discussion on the following practices.

I had two direct take-aways from his talk, one technical, the other cultural:

I had two direct take-aways from his talk, one technical, the other cultural:- Carlos shared how RSpec-puppet can be used to assert that packages are set up correctly - think acceptance-test-driven-development for puppet.

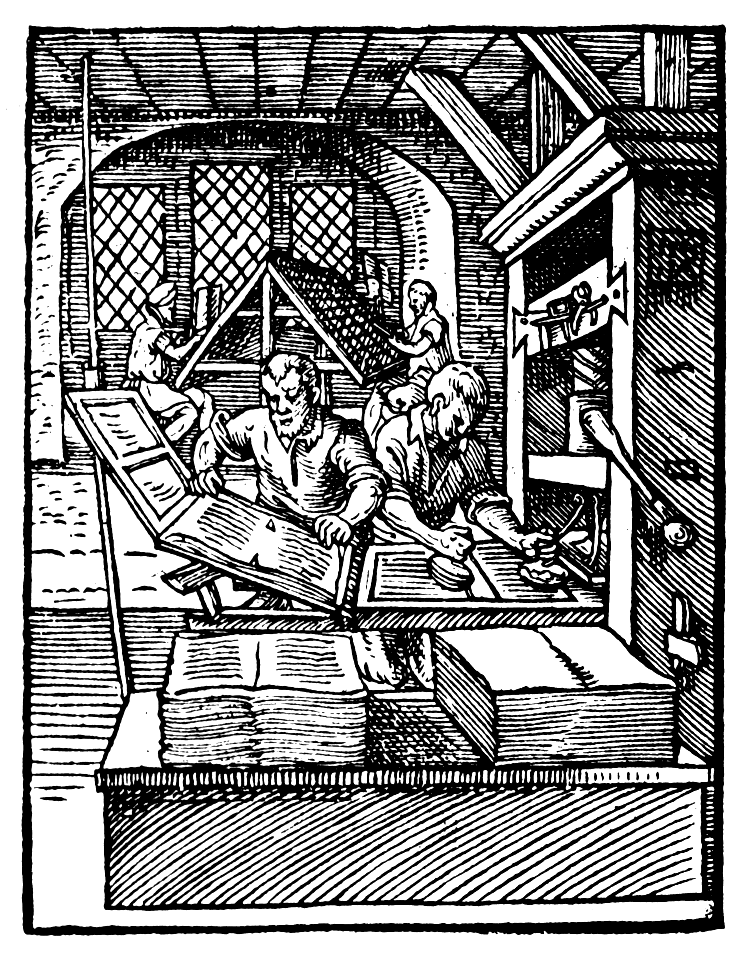

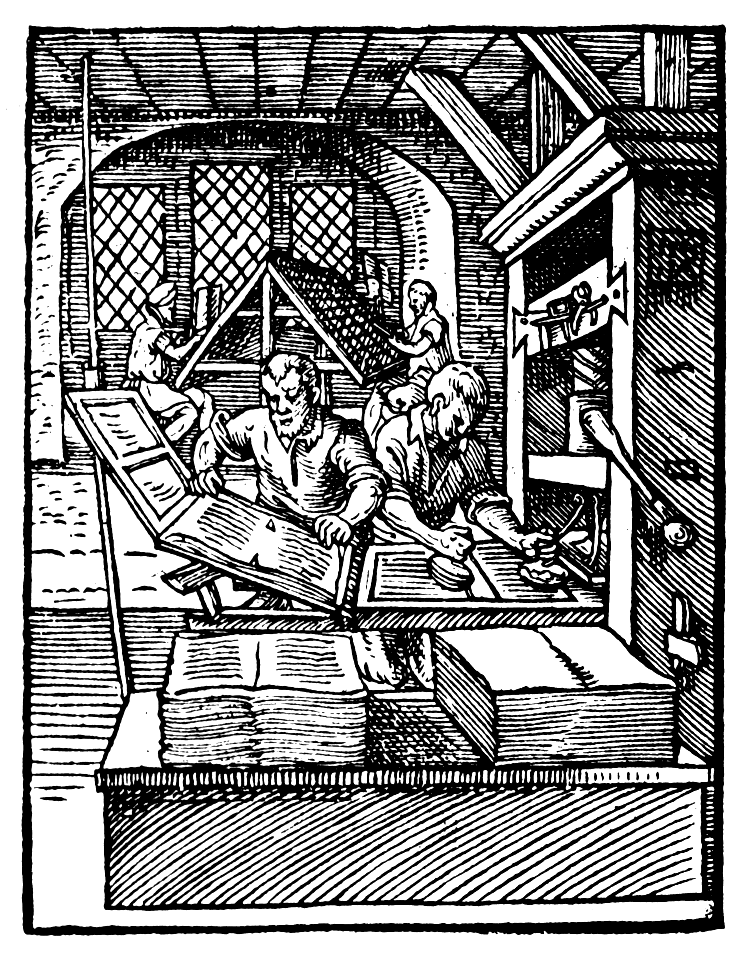

- There was a great quote and this picture at the very beginning, which gave the Printing Press as an example of a tool can lead to significant cultural change. The quote was from Patrick Debois: Tools can enable change in behaviour and eventually change culture. This is controversial point, because, in the case of BDD, for example, using tools too early can hinder or even prevent the right kind of culture change. Choosing the right tool at the right time is a difficult decision to make, but one we shouldn't be afraid of.

Following his talk, Carlos and I met up to have a deeper discussion on the following practices.